EU AI Act: A Practical Guide for AI-Powered Web Applications

A scannable, actionable guide to EU AI Act compliance for web applications using AI systems. Features a comparison table for Generic Business, FinTech, and HealthTech apps.

The EU AI Act (Regulation (EU) 2024/1689) entered into force on August 1, 2024, establishing a new global standard for AI regulation. If your web application uses any AI systems (from chatbots and recommendation engines to computer vision and predictive analytics), compliance is not optional. This guide cuts through the complexity to give you a clear, scannable overview of your obligations.

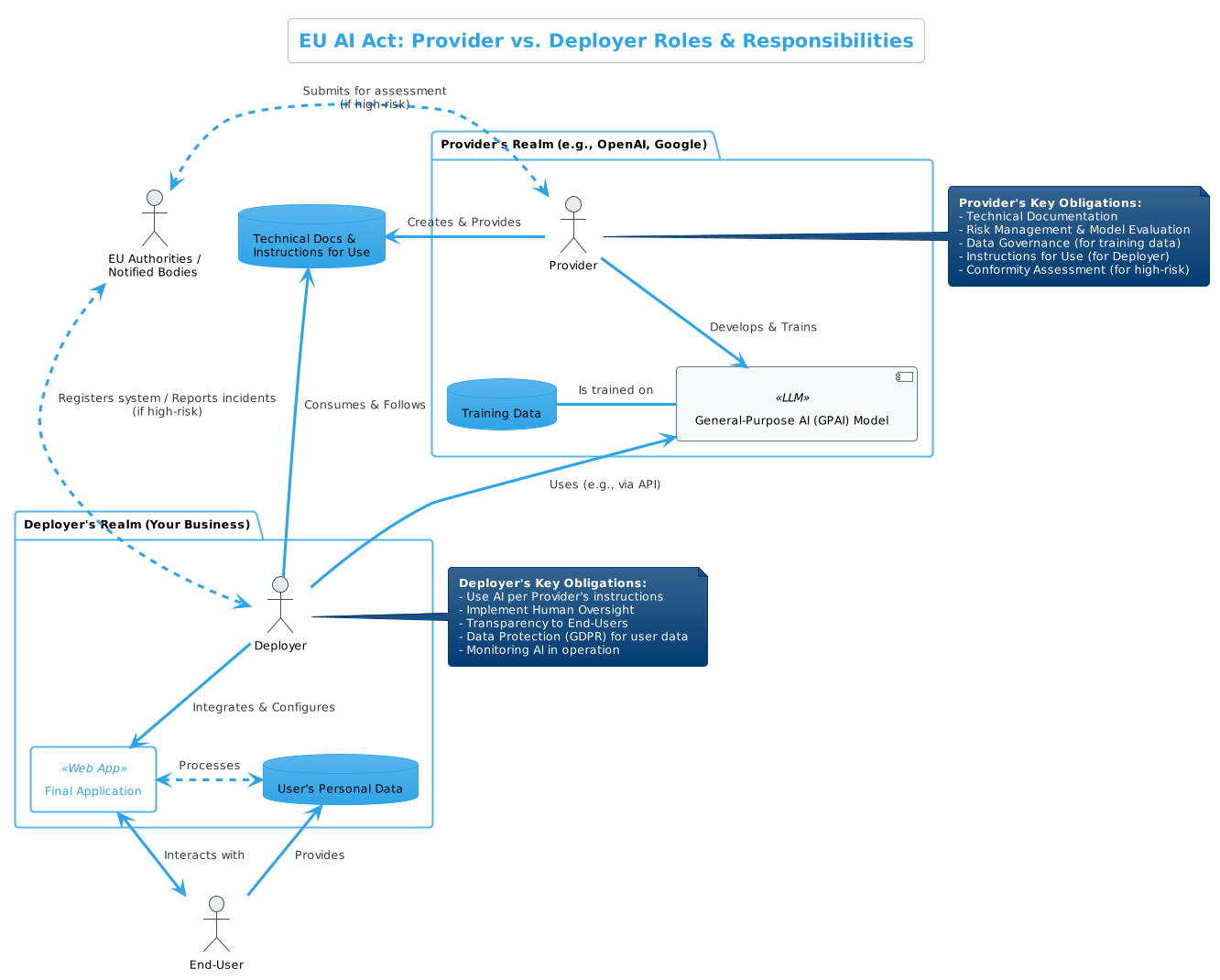

Understanding the Key Roles

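Before diving into compliance requirements, it's important to understand the roles defined by the EU AI Act and where your web application fits. The two that matter most for web apps are:

- Provider: A company or person that develops an AI system (or has one developed) and places it on the market or puts it into service under its own name or trademark.

- Deployer: A company or person that uses an AI system under its own authority in a professional context.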

Key Distinction: Information vs. Consent

Understanding the difference between AI Act transparency notices and GDPR/ePrivacy consent is crucial for proper implementation:

AI Transparency Notice (AI Act): This is an informational requirement: a "Just so you know..." statement. You must clearly inform users they are interacting with AI, but they don't need to agree or take action. Think of it as a prominent, non-removable sign that says "AI in use." Users must see it, but don't need to consent to it.

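Consent Pop-up (GDPR/ePrivacy): This is a permission request: a "May I?" question. Where your processing relies on consent (for example, placing non-essential tracking cookies), users must actively agree, typically by clicking "Accept", before you proceed. Consent is only one of several GDPR legal bases, so not every processing activity needs a pop-up.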

Practical analogy: A consent pop-up is like a waiver you must sign before entering a race. An AI notice is like a warning sign at the track saying "Caution: Track may be slippery." You don't sign it, but organizers must ensure you see it.

Where to Put What: A Clear Separation

| Document / Location | Purpose | Your Action |
|---|---|---|
| Privacy Policy | Inform & Disclose (GDPR) | ADD all the detailed information about AI data processing, sub-processors (OpenAI, etc.), and data transfers. |
| Terms and Conditions | Define Rules & Contract (Business Law) | DO NOT add the privacy details here. DO add clauses on acceptable use, ownership of output, and liability disclaimers for AI features. |
| In-App UI | Real-time Transparency (AI Act) | ADD banners and labels like "You are chatting with an AI" or "Content generated by AI" directly in the interface. This is separate from your legal documents. |

Keep your documents clean and focused on their specific legal purpose. The Privacy Policy is your data transparency hub.

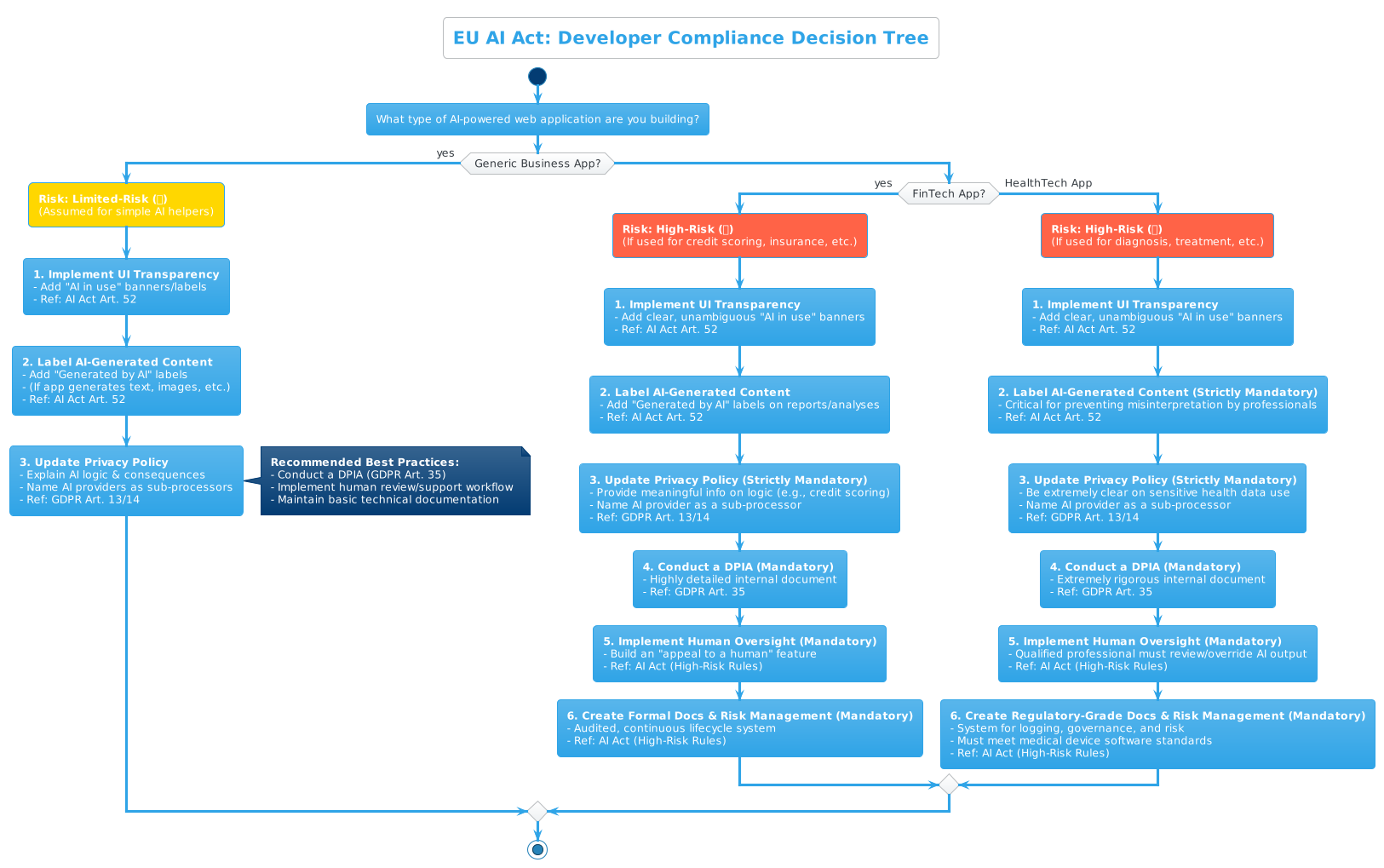

At a Glance: Compliance Needs by Industry

The AI Act uses a risk-based approach. Your obligations depend on what your AI is used for, not on the technology itself, and in practice the use case often follows from your industry. Most generic business apps face minimal requirements, but the burden increases significantly for sectors like FinTech and HealthTech.

Risk Classification Legend

The Act applies a risk-based classification system to all AI systems:

- 🚫 Prohibited AI systems (unacceptable risk): Banned entirely.

- 🔴 High-risk AI systems: Strict compliance requirements.

- 🟡 Limited-risk AI systems: Mainly transparency obligations.

- 🟢 Minimal-risk AI systems: Voluntary compliance.

| Requirement / Action | Generic Business App | FinTech App | HealthTech App |
|---|---|---|---|
| Risk Classification (AI Act) | 🟡 Limited-Risk: Typical default for simple AI helpers such as chatbots. | 🔴 High-Risk: If used for credit scoring of individuals or for risk assessment and pricing in life and health insurance. | 🔴 High-Risk: If used for diagnosis, treatment decisions, or as a medical device. |
| 1. AI Interaction Notice (AI Act Art. 50) | | | |
| ➡️ Implementation Method: | In-UI, at the point of interaction (e.g., chat window). | In-UI, at the point of interaction. | In-UI, for both patients and medical professionals. |
| Requirement: | 🟡 Mandatory: Inform users they are interacting with an AI via a banner or persistent label. | 🟡 Mandatory: Must be clear and unambiguous. | 🟡 Mandatory: Must be clear and unambiguous. |
| 2. AI-Generated Content Labeling (AI Act Art. 50) | | | |
| ➡️ Implementation Method: | A visible label ("Generated by AI") directly on or beside the content. | A visible label on any AI-generated reports, summaries, or analyses. | A visible label on any AI-generated diagnostic suggestions or summaries. |
| Requirement: | 🟡 Mandatory: If the app generates text, images, etc., for the user to see. | 🟡 Mandatory: If AI generates any user-facing content. | 🔴 Strictly Mandatory: Critical for preventing misinterpretation by professionals. |
| 3. Processing Transparency (GDPR Art. 13/14) | | | |
| ➡️ Implementation Method: | A dedicated section in your Privacy Policy. | A detailed, clear-language section in your Privacy Policy. | A very detailed, explicit section in your Privacy Policy. |
| Requirement: | 🟡 Mandatory: Must explain the use of AI and name any third-party AI providers as sub-processors; for automated decisions with significant effects, also explain the logic involved and the consequences. | 🔴 Strictly Mandatory: Must provide meaningful information about the logic of credit scoring models and name the AI provider as a sub-processor. | 🔴 Strictly Mandatory: Must be extremely clear about how sensitive health data is used by the AI and name the AI provider as a sub-processor. |
| 4. Data Protection Impact Assessment (DPIA - GDPR Art. 35) | | | |
| ➡️ Implementation Method: | An internal, collaborative document (as per our DPIA template). | An internal, mandatory, and highly detailed document. | An internal, mandatory, and extremely rigorous document. |
| Requirement: | 🟢 Recommended: If processing personal data at scale. | 🔴 Mandatory: Automated credit scoring is a systematic evaluation of individuals with significant effects, which requires a DPIA (Art. 35(3)(a)). | 🔴 Mandatory: Processing health data with AI at scale requires a DPIA (Art. 35(3)(b)). |
| 5. Human Oversight (AI Act - High-Risk) | | | |
| ➡️ Implementation Method: | A user support/review workflow. | A built-in "appeal to a human" or "request human review" feature. | A built-in feature for a qualified professional to review, reject, or override the AI's output. |
| Requirement: | 🟢 Recommended Best Practice. | 🔴 Mandatory: Users must have the right to contest an automated decision and obtain human intervention (GDPR Art. 22). | 🔴 Mandatory: The system cannot be fully autonomous for critical decisions. |
| 6. Technical Docs & Risk Management (AI Act - High-Risk) | | | |
| ➡️ Implementation Method: | Internal documentation. | A formal, documented Risk Management System and comprehensive technical documentation. | A regulatory-grade system for risk management, logging, and data governance. |
| Requirement: | 🟢 Basic: Document your AI models and data. | 🔴 Mandatory: Extensive, continuous lifecycle obligations. | 🔴 Mandatory: Must meet the same standards as other medical device software, in addition to the AI Act requirements. |

How to Implement Key Requirements

Let's break down the most common actionables from the table.

1. Implement Transparency (Mandatory for Most)

If users can "chat" with your app, you have a transparency obligation under AI Act Art. 50. It applies to every such app regardless of risk class, from August 2, 2026.

- Display Clear Disclaimers: Add a visible notice like "You are interacting with an AI assistant" or "AI-generated content" in the interface.

- Distinguish AI from Human: Ensure the UI design makes it obvious when content or responses are from an AI versus a human agent.

- Be Honest About Limitations: Include a brief explanation that the AI can make mistakes and that its advice should be verified.
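
The three points above can be sketched as a small view-model helper for a chat UI. This is a minimal sketch that assumes each message records who produced it; the `ChatMessage` type, the `Author` values, and the label texts are hypothetical and should be adapted to your own UI framework. Note that the notice is simply displayed: unlike a consent pop-up, nothing waits for the user to click "Accept".

```typescript
// Hypothetical message model: each message records who produced it.
type Author = "ai" | "human_agent" | "user";

interface ChatMessage {
  author: Author;
  text: string;
}

// Persistent, informational notice for the chat header (shown, never gated behind consent).
const AI_SESSION_NOTICE =
  "You are chatting with an AI assistant. It can make mistakes, so please verify important information.";

// Label rendered next to each message so AI and human responses are easy to tell apart.
const LABELS: Record<Author, string | null> = {
  ai: "AI-generated",
  human_agent: "Support agent (human)",
  user: null, // the user's own messages need no label
};

function messageLabel(msg: ChatMessage): string | null {
  return LABELS[msg.author];
}

// The header notice is needed whenever the AI assistant is active or has replied.
function needsAiNotice(messages: ChatMessage[], aiEnabled: boolean): boolean {
  return aiEnabled || messages.some((m) => m.author === "ai");
}

// Usage: render a short conversation as plain text.
const conversation: ChatMessage[] = [
  { author: "user", text: "Can I change my plan?" },
  { author: "ai", text: "Yes, you can switch plans under Settings > Billing." },
];
if (needsAiNotice(conversation, true)) console.log(AI_SESSION_NOTICE);
for (const m of conversation) {
  console.log(`${messageLabel(m) ?? "You"}: ${m.text}`);
}
```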

2. Nail GDPR & Data Protection (Mandatory for All)

The AI Act works with GDPR, not against it. If you process personal data (and you almost certainly do), this is non-negotiable.

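- Establish a Legal Basis: Every AI feature that processes personal data needs a legal basis under GDPR Art. 6, such as performance of a contract, legitimate interests, or consent. If you process special-category data such as health data, you also need an Art. 9 condition, which for most apps means explicit consent. Where you rely on consent, ask users clearly whether they agree to their data being processed by an AI for a specific purpose.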

- Anonymize or Pseudonymize: Before sending data to an LLM, remove or replace personal identifiers whenever possible. This minimizes your risk.

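- Conduct a DPIA: A Data Protection Impact Assessment is mandatory when processing is likely to result in a high risk to individuals (GDPR Art. 35), for example large-scale processing of sensitive data such as health data, or systematic profiling with significant effects such as credit scoring. It's a formal process to map out and mitigate privacy risks.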
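
As a minimal sketch of pseudonymizing a prompt before it leaves your backend, the code below masks e-mail addresses and phone-number-like strings with tokens and keeps the token mapping on your server. The two regular expressions are deliberately simple assumptions: they miss names, addresses, and free-text identifiers, and the phone pattern can also catch things like dates. Real deployments usually add a dedicated PII-detection step. Because the mapping lets you re-link the data, the result is pseudonymized, not anonymized, and remains personal data under GDPR.

```typescript
// Masked prompt plus the lookup table needed to restore the original values.
interface Pseudonymized {
  text: string;
  mapping: Record<string, string>; // token -> original value; stays on your server
}

// Deliberately simple patterns (assumption): real PII detection needs far more than this.
const PII_PATTERNS: Array<[string, RegExp]> = [
  ["EMAIL", /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g],
  ["PHONE", /\+?\d[\d\s-]{7,}\d/g],
];

// Replace each detected identifier with a stable token such as [EMAIL_1].
function pseudonymize(input: string): Pseudonymized {
  const mapping: Record<string, string> = {};
  const tokenFor: Record<string, string> = {}; // original -> token, so repeats share a token
  let counter = 0;
  let text = input;
  for (const [kind, pattern] of PII_PATTERNS) {
    text = text.replace(pattern, (match: string) => {
      let token: string | undefined = tokenFor[match];
      if (token === undefined) {
        counter += 1;
        token = `[${kind}_${counter}]`;
        tokenFor[match] = token;
        mapping[token] = match;
      }
      return token;
    });
  }
  return { text, mapping };
}

// Put the original values back into the model's answer before showing it to the user.
function restore(text: string, mapping: Record<string, string>): string {
  let out = text;
  for (const token of Object.keys(mapping)) {
    out = out.split(token).join(mapping[token]);
  }
  return out;
}

// Usage: only `masked.text` is sent to the external LLM.
const original = "Contact anna.schmidt@example.com or +49 170 1234567.";
const masked = pseudonymize(original);
console.log(masked.text); // Contact [EMAIL_1] or [PHONE_2].
console.log(restore(masked.text, masked.mapping) === original); // true
```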

3. Plan for Human Oversight (Crucial for High-Risk)

For high-risk systems, you cannot have a fully autonomous "black box" making critical decisions.

- Implement a "Stop Button": A human must have the ability to halt or override the AI's operation at any time.

- Enable Human Review: For decisions like loan approvals or medical suggestions, a qualified person must review and validate the AI's output before it takes effect.

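- Monitor and Log: Keep detailed logs of the AI's performance and decisions to enable effective human review and auditing. Deployers of high-risk systems must keep the system's automatically generated logs for at least six months (AI Act Art. 26(6)).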
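
The three oversight controls above can be sketched as a review queue for a hypothetical loan-decision service: the model only submits recommendations, nothing takes effect until a named reviewer confirms or overrides it, an operator can halt the AI, and every step is appended to a log. `ReviewQueue`, `AiRecommendation`, and the event names are illustrative assumptions, and a real system would persist the log rather than keep it in memory.

```typescript
type Outcome = "approve" | "reject";

// What the model produces: a recommendation, never a final decision.
interface AiRecommendation {
  caseId: string;
  outcome: Outcome;
  modelVersion: string;
}

interface LogEntry {
  at: string; // ISO timestamp
  caseId: string;
  event: string;
  actor: string; // "model" or the reviewer's id
}

interface FinalDecision {
  caseId: string;
  outcome: Outcome;
  decidedBy: string;
  overridden: boolean;
}

class ReviewQueue {
  private pending: Record<string, AiRecommendation> = {};
  private halted = false;
  readonly log: LogEntry[] = [];

  private record(caseId: string, event: string, actor: string): void {
    this.log.push({ at: new Date().toISOString(), caseId, event, actor });
  }

  // "Stop button": after this, the AI can no longer submit recommendations.
  halt(operator: string): void {
    this.halted = true;
    this.record("*", "ai_halted", operator);
  }

  submit(rec: AiRecommendation): void {
    if (this.halted) throw new Error("AI processing has been halted by an operator");
    this.pending[rec.caseId] = rec;
    this.record(rec.caseId, `recommended_${rec.outcome} (${rec.modelVersion})`, "model");
  }

  // Only a human reviewer turns a recommendation into a decision that takes effect.
  decide(caseId: string, reviewer: string, outcome: Outcome): FinalDecision {
    const rec: AiRecommendation | undefined = this.pending[caseId];
    if (rec === undefined) throw new Error(`No pending recommendation for ${caseId}`);
    delete this.pending[caseId];
    const overridden = outcome !== rec.outcome;
    this.record(caseId, overridden ? `overridden_to_${outcome}` : `confirmed_${outcome}`, reviewer);
    return { caseId, outcome, decidedBy: reviewer, overridden };
  }
}

// Usage: the model suggests rejecting, the analyst overrides after review.
const queue = new ReviewQueue();
queue.submit({ caseId: "loan-42", outcome: "reject", modelVersion: "credit-model-v3" });
const decision = queue.decide("loan-42", "analyst-7", "approve");
console.log(decision.overridden); // true
console.log(queue.log.map((e) => `${e.actor}: ${e.event}`).join("\n"));
```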

4. Maintain Documentation (Scales with Risk)

Your documentation burden grows with your risk level.

- For Everyone: At a minimum, keep a record of which LLM provider you use (e.g., OpenAI, Anthropic), the model version, and its intended purpose in your app.

- For High-Risk: You need comprehensive technical documentation covering the system's architecture, data sources, training methodology (if applicable), testing procedures, and risk assessments. This is what regulators will ask for during an audit.
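
One lightweight way to keep the minimum record is a typed inventory entry per AI feature, versioned alongside your code. The field names below are an assumption, not a format prescribed by the AI Act; for high-risk systems, Annex IV of the Act lists what the technical documentation must actually contain.

```typescript
type RiskTier = "minimal" | "limited" | "high";

// One entry per AI feature in your app (field names are illustrative).
interface AiFeatureRecord {
  feature: string;         // where the AI is used
  provider: string;        // e.g. "OpenAI", "Anthropic", or "self-hosted"
  model: string;           // exact model identifier/version you call
  intendedPurpose: string; // plain-language purpose of the feature
  riskTier: RiskTier;      // your own classification
  personalData: boolean;   // does the feature process personal data?
  dpaAccepted: boolean;    // DPA in place with an external provider
  lastReviewed: string;    // ISO date of the last review
}

// Flags missing basics; it does not replace a legal review.
function inventoryGaps(r: AiFeatureRecord): string[] {
  const gaps: string[] = [];
  if (r.model.trim() === "") gaps.push("model version missing");
  if (r.intendedPurpose.trim() === "") gaps.push("intended purpose missing");
  if (r.personalData && r.provider !== "self-hosted" && !r.dpaAccepted) {
    gaps.push("external provider processes personal data without a DPA");
  }
  if (r.riskTier === "high") {
    gaps.push("high-risk: full technical documentation needed (AI Act Annex IV)");
  }
  return gaps;
}

// Usage with a hypothetical support chatbot entry.
const supportChat: AiFeatureRecord = {
  feature: "Customer support chat",
  provider: "Anthropic",
  model: "model-id-placeholder", // hypothetical: record the exact model identifier you call
  intendedPurpose: "Answer billing and account questions; escalate to a human agent on request",
  riskTier: "limited",
  personalData: true,
  dpaAccepted: true,
  lastReviewed: "2025-01-15",
};
console.log(inventoryGaps(supportChat).length); // 0
```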

5. Anonymization of Data: The "Get Out of GDPR Free" Card?

Anonymization is not just relevant here: it is one of the most powerful concepts in data protection, and also one of the most misunderstood.

The core principle is simple: The GDPR only applies to personal data. If data is truly anonymous, it is no longer personal data, and therefore, GDPR rules (like requiring a legal basis, data subject rights, or conducting a DPIA) do not apply to your use of that data.

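This is why it's so attractive. You could theoretically train an AI on vast amounts of anonymous user data without needing consent from every individual. Keep in mind, though, that anonymizing personal data is itself a processing activity and needs a legal basis.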

However, there is a very high bar for data to be considered "truly anonymous" by EU standards.

- Anonymization: Irreversibly altering data so that the individual can no longer be identified, directly or indirectly. You must consider all means "reasonably likely" to be used to re-identify someone.

- Pseudonymization (Not Anonymous): Replacing identifying fields with a pseudonym or token (e.g., replacing "John Smith" with "User #12345"). This is not anonymous because the original data can be re-linked using a separate key. Pseudonymized data is still considered personal data and is fully under GDPR, but it is seen as a valuable security measure.
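
To make the "reasonably likely" test more concrete, a common first screening check is k-anonymity: every combination of quasi-identifiers (attributes such as age or postcode that could be linked with other data) should occur at least k times. The sketch below computes k for a small, made-up dataset before and after a simple generalization step. Treat it as a heuristic, not proof of anonymity: passing a k-anonymity check does not by itself meet the EU standard described above.

```typescript
// A record is a flat set of attributes; values are strings or numbers.
type Row = Record<string, string | number>;

// k = size of the smallest group of rows sharing the same quasi-identifier values.
function kAnonymity(rows: Row[], quasiIdentifiers: string[]): number {
  const groupSizes: Record<string, number> = {};
  for (const row of rows) {
    const key = JSON.stringify(quasiIdentifiers.map((q) => row[q]));
    groupSizes[key] = (groupSizes[key] || 0) + 1;
  }
  const sizes = Object.keys(groupSizes).map((key) => groupSizes[key]);
  return sizes.length === 0 ? 0 : Math.min(...sizes);
}

// Example generalization: exact age -> 10-year band, postcode -> first three digits.
function generalize(row: Row): Row {
  const band = Math.floor(Number(row["age"]) / 10) * 10;
  return { ...row, age: `${band}-${band + 9}`, postcode: `${String(row["postcode"]).slice(0, 3)}**` };
}

// Made-up records for illustration only.
const records: Row[] = [
  { age: 34, postcode: "10115", diagnosis: "asthma" },
  { age: 37, postcode: "10117", diagnosis: "diabetes" },
  { age: 52, postcode: "80331", diagnosis: "asthma" },
  { age: 58, postcode: "80333", diagnosis: "hypertension" },
];
const quasi = ["age", "postcode"];
console.log(kAnonymity(records, quasi));                 // 1: every person is unique
console.log(kAnonymity(records.map(generalize), quasi)); // 2
```

A k of 2 is still weak. In practice you would also check that each group contains varied sensitive values (the idea behind l-diversity), and remember that linking with outside data can defeat both checks.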

Is it in the EU AI Act?

The AI Act does not mandate anonymization. However, it is a critical enabling technique for complying with several of the Act's requirements for high-risk systems:

- Data Governance (Article 10): Training, validation, and testing data for high-risk systems must be relevant, sufficiently representative, and, to the best extent possible, free of errors and complete, and must be examined for possible biases. Anonymizing data can be a key part of the "privacy-preserving techniques" used to prepare these datasets.

- Risk Management: Using anonymous data significantly reduces the risk of data breaches and harm to individuals, which is a core part of the AI Act's risk management framework.

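- Testing: High-risk systems must be tested before they are placed on the market (Article 9), and the Act allows testing in real-world conditions under specific safeguards (Article 60). Using anonymized data for this testing can help meet these requirements without creating new privacy risks.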

In short: Anonymization is a GDPR concept, but it's a vital tool for meeting AI Act obligations safely and ethically.

Best Practice: Even though LLM providers offer strong contractual protections, you are far better protected, both legally and in terms of risk, if you anonymize or pseudonymize sensitive data before sending it. This is a recognized best practice under both GDPR and the EU AI Act.

6. Local SLM vs. Public LLM: The Fundamental Compliance Trade-Off

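This choice fundamentally changes your compliance posture, your responsibilities, and your risk profile. In this guide, a "local SLM" (Small Language Model) is a model you host on your own infrastructure, and a "public LLM" is a large-scale model you access through a provider's public API. What drives the compliance differences below is where the data is processed, not the model's size.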

Here is a breakdown of the key differences:

| Compliance Factor | 💻 Local SLM (Self-Hosted) | ☁️ Public LLM (API-based: OpenAI, Gemini, etc.) |
|---|---|---|
| Data Flow & Control | ✅ Maximum Control. User data is processed within your own infrastructure (on-premise or private cloud). It never leaves your environment. | ⚠️ Data is Sent to a Third Party. You are sending user prompts (which may contain personal data) to the LLM provider's servers for processing. |
| GDPR Role | You are the Data Controller. You are directly responsible for all processing. There is no third-party processor for the core AI logic (although your hosting provider may still act as a processor). | You are the Data Controller. The LLM provider (OpenAI, Google) is your Data Processor, a relationship governed by GDPR Art. 28. |
| Legal Agreements | Not applicable for the model itself (but check the model's license terms). You need to secure your own infrastructure. | 🔴 Data Processing Agreement (DPA) is Mandatory. You must have a GDPR-compliant DPA with the LLM provider. This is non-negotiable. |
| Data Transfers | ✅ No cross-border data transfer issues (assuming your servers are in the EU). | ⚠️ Potential for International Data Transfers. You must verify where the provider processes data (e.g., US). If outside the EU, the transfer must be covered by an adequacy decision (such as the EU-U.S. Data Privacy Framework) or by SCCs or another valid mechanism in the DPA. |
| Privacy Policy | Simpler. You just describe your own processing activities. | More complex. You must name the LLM provider as a sub-processor and explain that data is sent to them. |
| Risk of Data Misuse | Lower. The risk is limited to your own security measures. The data is not used to train any external models. | Higher. You must verify, and configure where needed, that your data is not used for model training (paid business API terms of major providers typically exclude this by default, but consumer and free tiers may differ). You are relying on the provider's security and contractual promises. |
| AI Act Role | You are likely the Provider of the final AI system, as you have integrated the model and are making it available. You bear more of the AI Act compliance burden directly. | The API company is the provider of the general-purpose AI model. If you offer an AI feature in your own app under your own name, you are generally the Provider of that AI system (a "downstream provider"); you are purely a Deployer only when you use someone else's finished AI system. Either way, you rely on the model provider's documentation and still carry your own obligations (human oversight, transparency). |
| Cost & Effort | Higher upfront cost and technical effort to set up, maintain, and secure the model. | Lower upfront cost (pay-per-use API), but ongoing operational costs and significant legal/compliance overhead. |

Conclusion & Recommendation

Anonymization is a strategy, not a magic wand. If you can truly and robustly anonymize data before it hits an AI model, you significantly reduce your compliance burden under both GDPR and the AI Act. However, getting it right is difficult and requires technical expertise.

The SLM vs. LLM choice is a trade-off between control and convenience.

- Choose a Local SLM if you handle extremely sensitive data (e.g., health, legal), if your customers are in highly regulated industries, or if "data never leaves our servers" is a key selling point. You accept higher technical overhead for lower third-party risk.

- Choose a Public LLM if you need state-of-the-art performance, want to move quickly, and are prepared to handle the legal and compliance overhead of managing a third-party data processor. This requires careful vendor due diligence and legal review of their DPA.
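
If you end up using both options, one pattern is to route each request by data sensitivity. The sketch below assumes your app already classifies each request (the `DataClass` labels are hypothetical), the endpoint URLs are placeholders, and the routing policy itself is an example, not a legal requirement.

```typescript
type DataClass = "public" | "personal" | "special_category";

interface ModelBackend {
  name: string;
  endpoint: string;           // placeholder URLs, not real services
  externalProcessor: boolean; // true when a third party processes the data
}

const LOCAL_SLM: ModelBackend = {
  name: "local-slm",
  endpoint: "http://slm.internal:8080/v1",
  externalProcessor: false,
};
const PUBLIC_LLM: ModelBackend = {
  name: "public-llm",
  endpoint: "https://api.llm-provider.example/v1",
  externalProcessor: true,
};

// Example policy (assumption): special-category data never leaves your
// infrastructure; other personal data goes to the public LLM only with a DPA.
function chooseBackend(dataClass: DataClass, dpaAccepted: boolean): ModelBackend {
  if (dataClass === "special_category") return LOCAL_SLM;
  if (dataClass === "personal" && !dpaAccepted) return LOCAL_SLM;
  return PUBLIC_LLM;
}

console.log(chooseBackend("special_category", true).name); // local-slm
console.log(chooseBackend("personal", true).name);         // public-llm
```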

For most startups and general business applications, using a Public LLM is the more common path due to its accessibility and power. However, it requires a mature approach to vendor management and transparency with your users.

A Note on Data Residency: The EU-U.S. Data Privacy Framework

A common misconception is that GDPR requires all personal data to be physically stored and processed within the EU. The law's actual goal is to ensure data is protected to a GDPR standard, regardless of where it is processed.

This is achieved through legal mechanisms like the EU-U.S. Data Privacy Framework (DPF). If a US company (like OpenAI, Google, or Anthropic) is certified under the DPF, you can transfer personal data to it without additional transfer tools such as Standard Contractual Clauses (SCCs). Many major US cloud and AI providers are certified, but verify each provider's current status on the official DPF list rather than assuming it.

So why is hosting in the EU still considered a best practice?

- Simplicity: It removes the complexity of managing international transfer mechanisms.

- Customer Trust: Many European customers, especially large enterprises, have policies demanding their data be stored in the EU. It's a major selling point.

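- Reduced Legal Risk: Transfer frameworks can be challenged in court. The DPF's predecessors, Safe Harbor and Privacy Shield, were both invalidated by the Court of Justice of the EU (in the Schrems I and Schrems II rulings). Keeping data in the EU insulates you from this legal volatility.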

So, What Is Your Job as a Startup?

You don't need to negotiate bespoke contracts with every provider. Instead, you have a clear, manageable set of tasks:

- Find the DPA: Go to the legal or trust center of your chosen LLM provider and locate their standard DPA for business services.

- Review the DPA: This is the critical step. You don't negotiate it, but you must read it to understand their commitments. Pay close attention to:

  - Data Location: Where will they process your data? (e.g., US or EU). This is vital for international transfer rules.

  - Sub-processors: Who else do they share data with to provide their service? They must list these sub-processors.

  - Security Measures: What technical and organizational measures do they promise to have in place? (e.g., encryption at rest and in transit).

  - Data Retention: How long do they keep your data after you send it? Can you request zero data retention?

- Formally Accept It: Follow their process to make the DPA legally binding for your account. Keep a record of this acceptance.

- Update Your Own Privacy Policy: You must now update your public-facing Privacy Policy to name your LLM provider as a sub-processor, explaining to your users that their data is shared with this third party for the purpose of providing the AI features.
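
The review points above can be captured as a checklist per provider, so open questions are visible before you accept the DPA. This is a sketch under assumptions: the fields and rules are distilled from the list above, not a legal test, and a value like `transferMechanism: "DPF"` should be confirmed against the provider's current entry on the official Data Privacy Framework list.

```typescript
// One review per AI provider; field names are illustrative, not a legal standard.
interface DpaReview {
  provider: string;
  processingRegions: string[];                // e.g. ["EU"] or ["US"]
  transferMechanism: "none" | "DPF" | "SCCs"; // how non-EU processing is covered
  subprocessorListPublished: boolean;
  securityMeasuresDescribed: boolean;         // e.g. encryption at rest and in transit
  retentionDays: number | null;               // null = not stated in the DPA
  zeroRetentionAvailable: boolean;            // answer to "can we request zero retention?"
  usedForTraining: boolean;
  acceptedOn: string | null;                  // ISO date of formal acceptance
}

// Lists the points that still need attention.
function openIssues(r: DpaReview): string[] {
  const issues: string[] = [];
  const outsideEu = r.processingRegions.some((region) => region !== "EU");
  if (outsideEu && r.transferMechanism === "none") {
    issues.push("non-EU processing without a transfer mechanism");
  }
  if (!r.subprocessorListPublished) issues.push("sub-processor list missing");
  if (!r.securityMeasuresDescribed) issues.push("security measures not described");
  if (r.retentionDays === null) issues.push("retention period unclear");
  if (r.usedForTraining) issues.push("customer data used for model training");
  if (r.acceptedOn === null) issues.push("DPA not formally accepted yet");
  return issues;
}

// Usage with a hypothetical provider (all values are made up).
const review: DpaReview = {
  provider: "ExampleAI Inc.",
  processingRegions: ["US"],
  transferMechanism: "DPF",
  subprocessorListPublished: true,
  securityMeasuresDescribed: true,
  retentionDays: 30,
  zeroRetentionAvailable: true,
  usedForTraining: false,
  acceptedOn: null,
};
console.log(openIssues(review).join("; ")); // DPA not formally accepted yet
```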

"Under the Hood" AI Processing: No Free Pass

Important: Backend AI processing does not exempt you from compliance obligations: it simply shifts where and how you must comply.

Key Rules for Backend AI Processing

- For ALL backend processing of personal data: You must be transparent about it in your Privacy Policy (GDPR Art. 13/14).

- If the AI generates content shown to the user: You must ensure that content is labeled or marked as AI-generated (AI Act Art. 50).

- If the AI alone makes significant decisions about a user: You must provide the right to human intervention and to contest the decision (GDPR Art. 22), and if the use case is listed as high-risk in Annex III of the AI Act (e.g., credit scoring), you must also comply with the high-risk rules.

Summary Table: Which Rule Applies When?

| Scenario | AI Act Art. 50 (In-Context Notice) | GDPR Art. 13/14 (Privacy Policy) | GDPR Art. 22 (Right to Contest) | High-Risk AI Act Rules |
|---|---|---|---|---|
| Chatbot (Direct Interaction) | ✔️ Mandatory | ✔️ Mandatory | ❌ (Usually Not) | ❌ (Usually Not) |
| AI generates a summary for the user | ✔️ Mandatory (for the output) | ✔️ Mandatory | ❌ (Usually Not) | ❌ (Usually Not) |
| AI personalizes a news feed "under the hood" | ❌ No | ✔️ Mandatory | ❌ No | ❌ No |
| AI rejects a loan application "under the hood" | ❌ No (for the process) | ✔️ Mandatory | ✔️ Mandatory | ✔️ Mandatory |
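
The summary table reduces to a few yes/no questions per AI feature. A minimal sketch of that decision logic, assuming you can answer each question for a given feature; the `AiFeature` fields are hypothetical, and the table's "Usually Not" cells are treated as "no", so edge cases still need case-by-case review:

```typescript
// Yes/no answers about one AI feature (field names are illustrative).
interface AiFeature {
  userInteractsWithAi: boolean;                // e.g. a chatbot
  showsAiGeneratedContent: boolean;            // e.g. an AI summary shown to the user
  processesPersonalData: boolean;
  solelyAutomatedSignificantDecision: boolean; // e.g. a loan rejected without human involvement
  annexIiiHighRiskUseCase: boolean;            // e.g. credit scoring of individuals
}

interface ApplicableRules {
  transparencyNotice: boolean;      // AI Act transparency obligations (in-context notice/label)
  privacyPolicyDisclosure: boolean; // GDPR Art. 13/14
  rightToContest: boolean;          // GDPR Art. 22
  highRiskRules: boolean;           // AI Act high-risk obligations
}

function applicableRules(f: AiFeature): ApplicableRules {
  return {
    transparencyNotice: f.userInteractsWithAi || f.showsAiGeneratedContent,
    privacyPolicyDisclosure: f.processesPersonalData,
    rightToContest: f.solelyAutomatedSignificantDecision,
    highRiskRules: f.annexIiiHighRiskUseCase,
  };
}

// Usage: the "AI rejects a loan application under the hood" row.
const loanScoring = applicableRules({
  userInteractsWithAi: false,
  showsAiGeneratedContent: false,
  processesPersonalData: true,
  solelyAutomatedSignificantDecision: true,
  annexIiiHighRiskUseCase: true,
});
console.log(JSON.stringify(loanScoring));
// {"transparencyNotice":false,"privacyPolicyDisclosure":true,"rightToContest":true,"highRiskRules":true}
```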

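Key Compliance Deadlines

- February 2, 2025: Prohibitions and AI Literacy Apply. Prohibited AI practices are banned, and providers and deployers must ensure a sufficient level of AI literacy among staff working with AI.

- August 2, 2025: GPAI Model Obligations. Rules for providers of general-purpose AI models (like OpenAI) take effect. As a downstream user, check that your provider publishes the documentation you need.

- August 2, 2026: Transparency and High-Risk Rules Apply. All apps with chatbots or AI-generated content must meet the transparency obligations (Art. 50), and high-risk systems listed in Annex III (e.g., credit scoring) must be fully compliant.

- August 2, 2027: High-Risk AI in Regulated Products. Obligations apply to high-risk AI that is part of products covered by existing EU product legislation, such as medical devices.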
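
If you track these dates in an internal compliance dashboard, a small lookup keeps them in one place. The milestones below follow the application dates in Article 113 of the Regulation; the labels are shortened summaries, and `rulesInForce` is a hypothetical helper that simply returns the milestones that apply on a given date.

```typescript
interface Milestone {
  date: string; // ISO date (YYYY-MM-DD) from which the rules apply
  rules: string;
}

const AI_ACT_MILESTONES: Milestone[] = [
  { date: "2025-02-02", rules: "Prohibited practices banned; AI literacy obligations" },
  { date: "2025-08-02", rules: "General-purpose AI model obligations; governance and penalties" },
  { date: "2026-08-02", rules: "Most remaining rules, incl. Annex III high-risk systems and transparency (Art. 50)" },
  { date: "2027-08-02", rules: "High-risk AI in products covered by Annex I (e.g. medical devices)" },
];

// ISO dates in YYYY-MM-DD form compare correctly as strings, so no date parsing is needed.
function rulesInForce(onDate: string): string[] {
  return AI_ACT_MILESTONES.filter((m) => m.date <= onDate).map((m) => m.rules);
}

console.log(rulesInForce("2026-01-15").length); // 2
```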

Conclusion: Your Compliance Path

For most generic business web apps, the path is straightforward: focus on transparency and solid GDPR compliance. Classify your system honestly, inform your users they're talking to an AI, and handle their data with care.

If you operate in FinTech or HealthTech, your journey is more demanding. Treat AI Act compliance as a core product requirement from day one, investing in risk management, documentation, and robust human oversight.

Example: Patient Analysis App

The DPA with Anthropic is your legal starting line. It makes using their service possible. But for a HealthTech company, anonymization or at least robust pseudonymization is the professional, ethical, and legally safest way to operate. It demonstrates due diligence, adheres to the principle of data minimization, and protects what matters most: your patients' privacy and your company's trust.

Official Resources

For the complete legal text and authoritative guidance:

- EU AI Act Explorer (unofficial, maintained by the Future of Life Institute): artificialintelligenceact.eu

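- Official PDF: Regulation (EU) 2024/1689 - AI Act: The final text of the AI Act as published in the Official Journal of the EU, available on EUR-Lex in all official EU languages, including English and German. The regulation applies from August 2, 2026, with some provisions applying earlier (from February 2, 2025) and some later (from August 2, 2027).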